Powerful Aggregation Framework of MongoDB

As it’s known, MongoDB is a document-oriented database with lots of powerful features and advantages. One of those powerful features is the aggregation framework of MongoDB.

The main purpose of the aggregation framework is to process data records and return computed results. When using aggregation pipelines, it’s possible to group documents, perform a variety of arithmetical and other operations, perform left join within a database, merge collections, and bunch of similar cool things.

The structure of the aggregation pipeline

For performing aggregations, it’s required to have a pipeline, which consists of many stages. Documents sequentially pass through the stages, transforming the document with each stage and passing it onto the next stage. Stages can contain various pipeline operators for working with arrays, strings, dates, etc.

When initiating an aggregation, it’s enough to run the following mongo shell command:

db.getCollection(collectionName).aggregate(pipeline)

where collectionName is the name of the collection on which the aggregation pipeline needs to run and pipeline is an aggregation pipeline(array of stages).

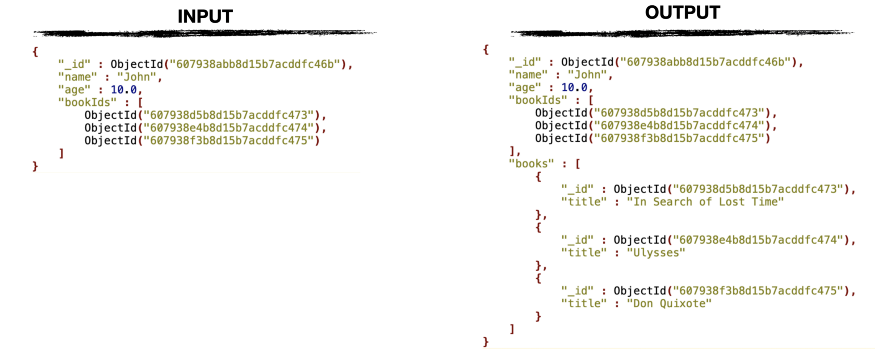

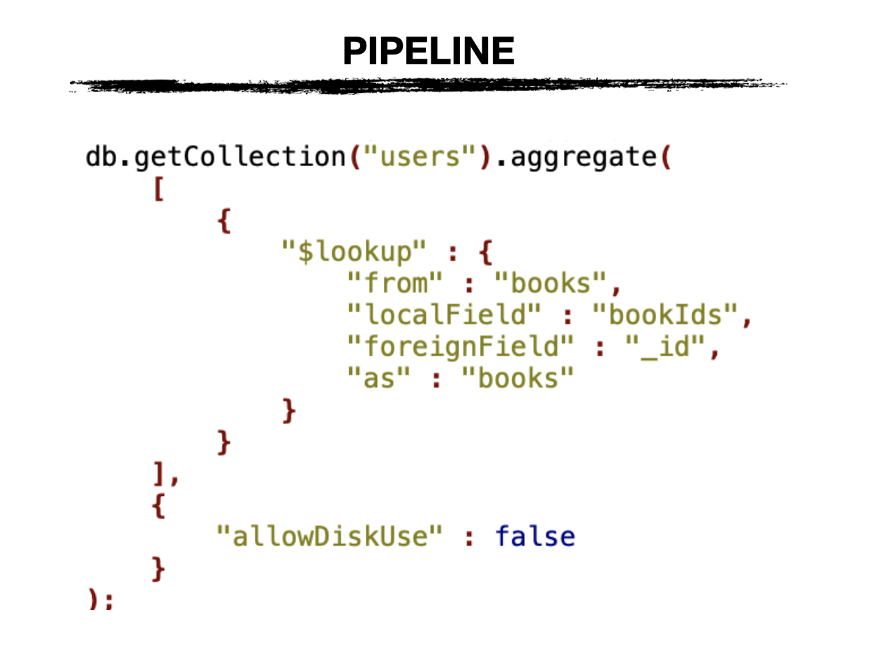

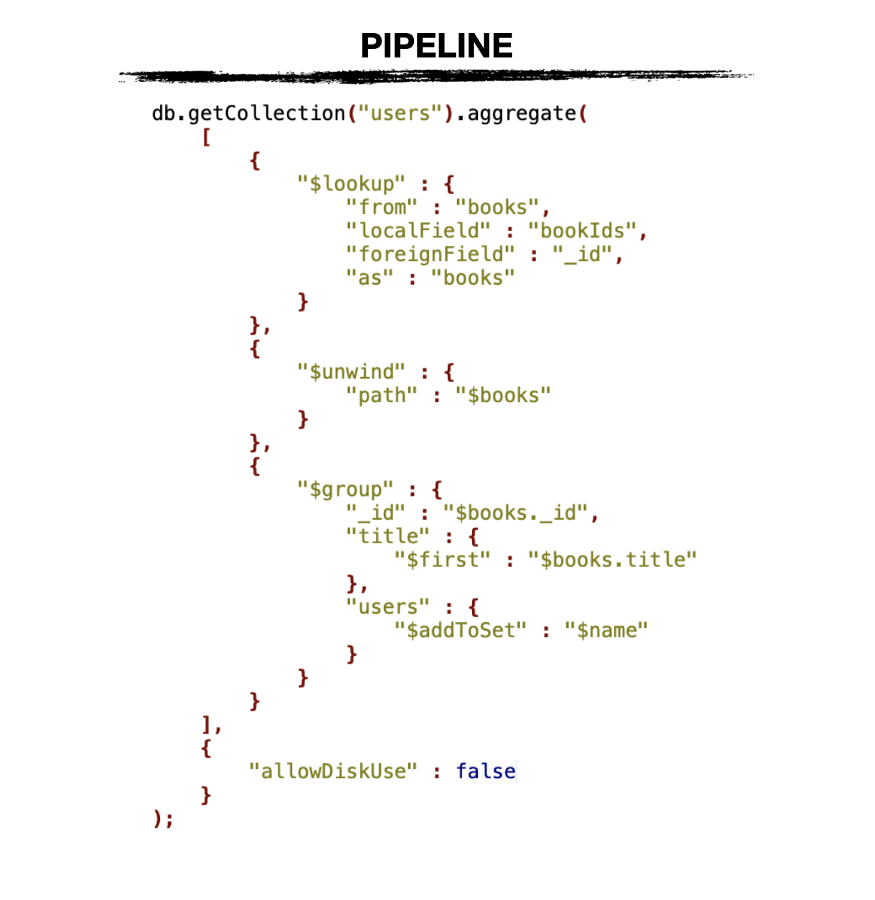

Left join using $lookup

$lookup stage lets you “join” a collection by performing an equality match between fields of current and “joined” collections. This stage requires specifying some key options such as collection and field names.

In this case, we need to add a new array of books to the documents of the current collection by selecting documents from the book collections based on the books' IDs array.

where:

from : “joined” collection name

localField : local field name which will be used for an equality match

foreignField : field name in “joined” collection which will be used for an equality match

as : output field name

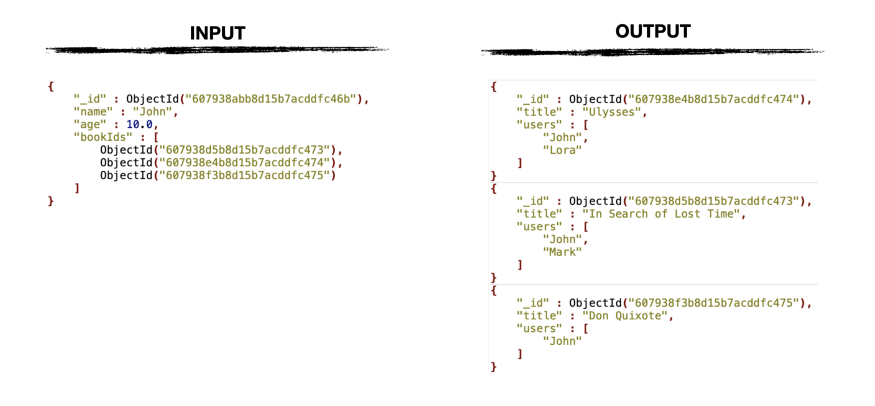

Categorizing documents using $group and $unwind

There are lots of cases when we need to categorize documents based on some criteria and one of the ways to do this is by using $unwind and $group stages.

For example, let’s say that there are users who have specified the list of their favorite books. We need a pipeline that will create separate documents for each book where each document will contain a list of users who like that book.

Since user documents only contain object IDs of their favorite books, in the pipeline above, we used $lookup stage to bring full objects of books into the books array.

By using $unwind stage we deconstruct user documents and reconstruct them by using $group stage. Inside the $group stage, we accumulate user names into the users array.

Categorizing documents using $bucketAuto

Another stage that lets you categorize documents is $bucketAuto. It automatically defines buckets based on given parameters and groups documents based on min/max ranges.

In this case, we want to categorize users based on their book count. As you can see, there are three buckets defined in the output section where each bucket has min and max ranges:

bucket 1 [1, 2)

bucket 2 [2, 4)

bucket 3 [4]

There are two stages used in the pipeline above, $addFields which adds book counts for each user document, and $bucketAuto which groups documents by booksCount field.

In the $bucketAuto stage specified the following fields:

groupBy : the field name by which documents should be grouped (can be an expression as well)

buckets : maximum count of buckets

output : an output document specification

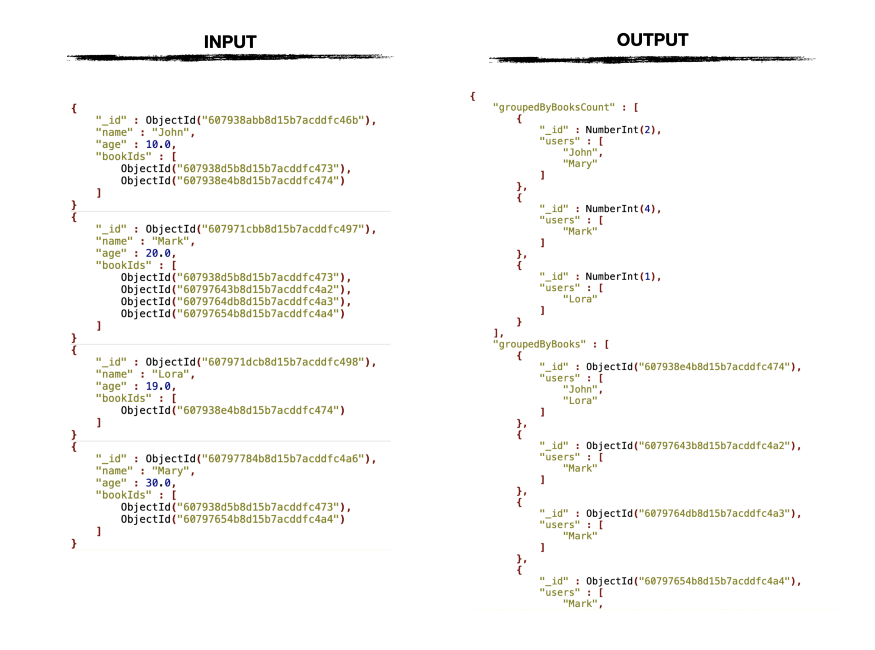

Multiple pipelines in a single stage using $facet

For this case, we want to combine multiple pipelines into a single stage. As an output of aggregation, we need to have two arrays of grouped users, one grouped by book count and another grouped by book IDs.

In the pipeline above, in $facet stage, we defined two pipelines named groupedByBooksCount and groupedByBooks. After executing, the output of each pipeline will be stored in the appropriate key.

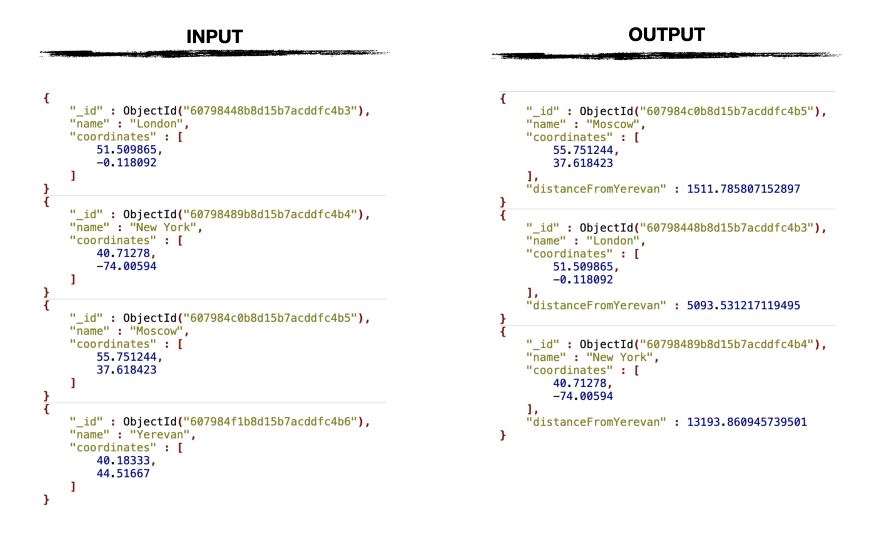

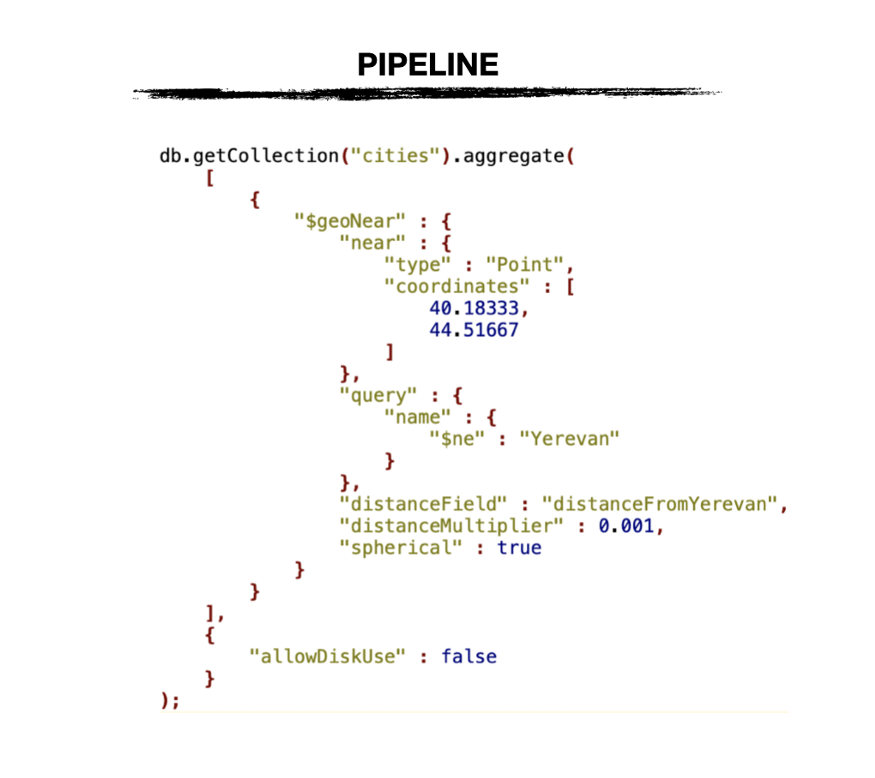

Location queries using $geoNear

As it’s said in the documentation, $geoNear output documents in order of nearest to farthest from a specified point.

In this example, we have a collection called cities where each document contains a name and the coordinates of a specific city. One of the cities in that collection is Yerevan. We need to add a new field to all the documents that will tell the distance between Yerevan and the current city location.

In the pipeline above, the coordinates array contains the geographic coordinates of Yerevan. Value of distanceField is a field name that will contain distance between two cities and distanceMultiplier is for converting distance from meters to kilometers.

Note: To use $geoNear stage, on the coordinates field must be created 2d or 2dsphere index.Recursive search using $graphLookup

Using $graphLookup it’s possible to perform a recursive search on a collection. It recursively populates all the connected documents based on specified fields.

In this example $graphLookup operation recursively matches to the reportsTo and name fields in the users' collection, returning the reporting hierarchy for each user:

In the pipeline above there are three stages:

$graphLookup — recursively finds related documents and outputs them into reportingHierarchy array

$unwind — deconstructs and adds to reportingHierarchy array

$group — reconstructs documents and overrides existing reportingHierarchy array leaving only user names

Storing pipeline output to a collection using $out

This stage takes the documents returned by the aggregation pipeline and writes them into a specified collection.

Note: The $out stage must be the last stage in the pipeline

In this specific example, we added $out stage at the end of the pipeline that will save the output of the last stage into distances collection in the same database.

Conclusion

In this article, we covered very few features of the aggregation framework of MongoDB. For deep learning, I suggest having a look at the official documentation and do some practice work using a test database.